As quickly as AI captured the attention of every agency and marketer, the dark side of AI has captured as much attention – maybe more.

The potential of AI is so seductive that, even though people understand AI can go wrong or hallucinate, they more or less accept the failure rate.

This attitude makes sense when there is not much to lose, as in creating a resume, where mistakes are easy to spot and correct. In business, however, AI misfires are hard to detect and can have significant, even catastrophic, consequences. An AI misfire can cause a brand to post dangerous content or a marketer to waste advertising budget chasing the wrong audience.

Therein lies the excruciating tension for marketers: AI has a lot of efficiency to offer, but the clear and present danger of AI misfires creates ever-widening AI trust gaps.

While AI companies are building ever-more powerful tools, they are taking shortcuts, as Jason Allen Synder (IPG) noted recently in a LinkedIn post: “Meta just proved what many of us have warned: ‘ship fast, fix later’ in AI isn’t just reckless. It’s lethal.” (LinkedIn)

While the big companies are taking shortcuts, everyone is constantly looking over one shoulder, wondering whether the potential upside is worth the risks.

It is clear we cannot count on the large companies to create the right foundation for AI that can be trusted. It is equally clear we cannot trust big AI companies to do the right thing.

This is exactly the moment when redemption will come from the most unexpected place – innovative companies that take the time to get it right. This is the moment when marketers can trust AI because it is AI that was developed for marketers, by marketers.

This is exactly the moment for a change in the conversation by powering AI with the intelligence of context.

Why AI Hallucinations (and Misfires) Happen

We should recognize that AI is unlike previous technologies because AI can do two things past technologies could not do: AI can make decisions and AI can “create” new ideas. This puts AI in a new category of technology that might be better characterized as “Alien Intelligence.”

This alien intelligence is a black box even to those who develop it. Fixing a bug in AI is much harder than it seems because AI creates answers in its own way, which makes it very hard to reverse engineer, especially when AI dreams or hallucinates. Technically, AI can get it wrong on a few levels and for different reasons. (For a deeper explanation, please see https://trustwebtimes.com/does-ai-dream/)

1) AI Dreaming: AI can get “overstimulated” during a computer routine, much the same way a 5-year-old can have nightmares after watching a scary movie right before bed. This AI dream-state can occur as a result of an AI training session or when the AI goes through “experience replay” while trying to process a lot of data.

2) AI Hallucinations: Hallucinations are different from AI dreams. Scientists use the term hallucinations for the fictional material AI makes up. “…AI fabricates information entirely, behaving as if they are spouting facts. These hallucinations are particularly problematic in domains that require multi-step reasoning…” (Source: https://www.cnbc.com/2023/05/31/openai-is-pursuing-a-new-way-to-fight-ai-hallucinations.html).

Hallucinations happen because, at its heart, AI is a completion engine. AI’s “compulsion” is to “complete” a query and provide answers, no matter what. This is the basis for hallucinations: the AI answers even when it lacks the information to shape its answer.

3) AI Misfires: AI does not distinguish fact from fiction. Therefore, if AI does not “understand” the question, it will provide whatever the completion engine “thinks” makes sense. Right or wrong is not the main concern of AI; completing the query is.

Bottom line – the framework to distinguish between the real and the false is not baked into AI technology.

Does this mean we are doomed to live with AI that cannot be trusted? No. The answer lies in giving AI context, just as we humans use context every minute of every day. Here’s what that looks like.

Creating AI Trust Is All About Context

Humans can function because we can apply context to what we see, hear, and experience. AI tools from the big companies lack a context data layer, a critical missing link in preventing AI mistakes. Without context, AI can wander into whatever data nook or cranny it wants to. Without context, AI has no guardrails to shape its output.

The lack of a context layer is an especially difficult gap for marketers because out-of-context communication does more harm than good.

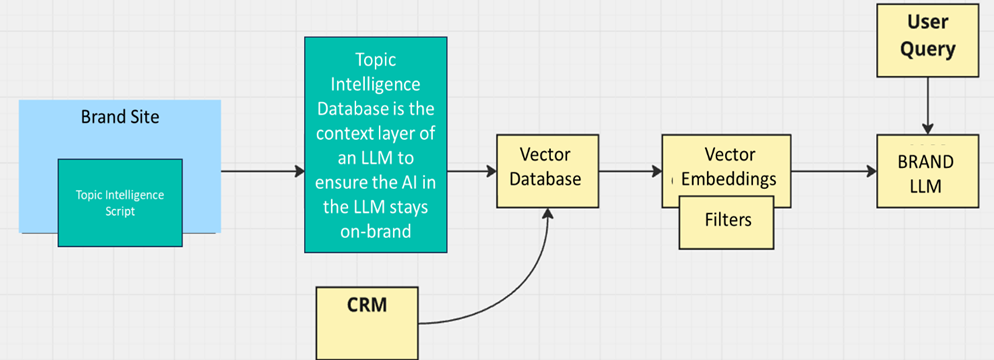

Technically, the answer lies in adding a contextual data layer to a brand’s LLM. In this approach, the context data layer clusters brand-related topics, which then act as a truth filter and guide that keeps the AI wheels on the brand trolley instead of allowing AI to wander wherever its static and generic training takes it (Figure A). This brand-centric knowledge base is an intelligent information architecture that provides an AI with:

- Proprietary Data: This includes a brand’s knowledge base: product information (web-based), documented policies, marketing copy, topics that drive engagement and outcomes, and customer relationship history. This is the information that the AI’s public training data could never know.

- Real-time Information: A contextual layer can be integrated with live data feeds, such as a company’s web content, CRM system, current company news, or even channel performance. This ensures the AI’s responses are always on brand, up-to-date and relevant.

- Business Rules and Guardrails: This layer enforces specific rules that dictate how the AI should behave. It can define the brand’s tone of voice, what topics are off-limits, and how to address a branding crisis.
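The three kinds of context listed above can be pictured as one small data structure that is assembled into a prompt before a question reaches the model. The following is only a minimal sketch: the `ContextLayer` class, the `build_prompt` function, and the sample product and rules are all hypothetical names invented for illustration, not part of any specific product or library.

```python
from dataclasses import dataclass, field


@dataclass
class ContextLayer:
    """Hypothetical container for the three kinds of context described above."""
    proprietary_data: list[str] = field(default_factory=list)  # brand knowledge base
    realtime_data: list[str] = field(default_factory=list)     # live feeds (CRM, news)
    rules: list[str] = field(default_factory=list)             # tone, off-limits topics


def build_prompt(layer: ContextLayer, question: str) -> str:
    """Place brand facts, current information, and rules ahead of the question."""
    sections = [
        "Brand facts:\n" + "\n".join(f"- {fact}" for fact in layer.proprietary_data),
        "Current information:\n" + "\n".join(f"- {item}" for item in layer.realtime_data),
        "Rules:\n" + "\n".join(f"- {rule}" for rule in layer.rules),
        "Answer using only the facts above.\nQuestion: " + question,
    ]
    return "\n\n".join(sections)


# Sample data is made up for illustration.
layer = ContextLayer(
    proprietary_data=["The Trail 2 backpack holds 30 liters."],
    realtime_data=["Free shipping runs through Friday."],
    rules=["Use a friendly tone.", "Never give medical advice."],
)
prompt = build_prompt(layer, "How big is the Trail 2?")
print(prompt)
```

The point of the sketch is the ordering: the model sees verified brand facts and rules before it sees the question, so its completion starts from brand context rather than from generic training data.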

Why Contextual Data Can Prevent AI Hallucinations and Misfires

AI hallucinations and mistakes occur because large language models are, in effect, “completion engines.” They are designed to predict the most probable word or phrase based on the patterns they learned from their training data. The training data itself is expansive in scope, but the model lacks a true understanding of facts or a connection to real-world brand information.
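A toy example makes the “completion engine” idea concrete. The sketch below is not how a real large language model is built; it is a deliberately tiny word-pair counter, with made-up training text, that always appends the most frequent next word. Even so, it shows the failure mode: the statistically likely completion can contradict what the training text actually said.

```python
from collections import Counter, defaultdict

# Made-up training text: shoes are mostly "waterproof", jackets are "warm".
training_text = (
    "our shoes are waterproof . our shoes are lightweight . "
    "our shoes are waterproof . our jackets are warm ."
)

# Count which word follows each word in the training text.
follows = defaultdict(Counter)
words = training_text.split()
for current, nxt in zip(words, words[1:]):
    follows[current][nxt] += 1


def complete(prompt: str, max_words: int = 3) -> str:
    """Extend the prompt by repeatedly appending the most frequent next word."""
    out = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no known continuation for this word
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


completion = complete("our jackets", max_words=2)
print(completion)  # prints "our jackets are waterproof"
```

The training text says the jackets are warm, yet the completion claims they are waterproof, because “waterproof” is the most common word after “are.” Nothing in the procedure checks whether the statement is true.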

More important, everything in AI’s training knowledge base is of equal value to everything else.

However, in the real world, context provides the connective tissue of trusted comprehension.

This is why a contextual layer can directly address the core trust issues with AI:

1. Context Plants AI Firmly in Brand Relevant Soil: When a user asks a question, the contextual layer first searches its proprietary data for the answer. The AI is then instructed to generate a response based on that specific, verified information, rather than pulling from its general, unverified knowledge base.

2. Eliminates Guesswork: Without a contextual layer, an AI might “hallucinate” an answer to a question it doesn’t know. By providing the AI with the most accurate and current brand information, the contextual layer eliminates the need for the model to guess, dramatically reducing the risk of generating false information.

3. Enforces Boundaries: The rules within the contextual layer act as a safety net. For example, a customer service AI can be given a rule to never provide a specific medical recommendation or financial advice, even if it has information on the topic. This prevents the AI from venturing into dangerous or liability-inducing territory.
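The three points above can be sketched as a single lookup routine. This is a simplified illustration under stated assumptions: the knowledge base, the blocked-topic list, and the `answer` function are hypothetical, retrieval is a plain keyword match, and the verified fact is returned directly, whereas a production system would typically use a vector search and pass the retrieved fact to the model to phrase the reply.

```python
import re

# Hypothetical brand knowledge base and guardrail list, invented for illustration.
KNOWLEDGE_BASE = {
    "return policy": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}
BLOCKED_TOPICS = {"medical", "diagnosis", "investment"}


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def answer(question: str) -> str:
    words = tokens(question)
    # Enforce boundaries: refuse off-limits topics before anything else.
    if words & BLOCKED_TOPICS:
        return "Sorry, I can't help with that topic."
    # Ground the answer: search the proprietary data first.
    for topic, fact in KNOWLEDGE_BASE.items():
        if tokens(topic) <= words:
            return fact
    # Eliminate guesswork: admit the gap instead of completing anyway.
    return "I don't have verified information on that."


print(answer("What is your return policy?"))
print(answer("Can you give me a medical diagnosis?"))
print(answer("Do you sell hats?"))
```

The design choice worth noticing is the final line of `answer`: when nothing verified is found, the routine declines rather than letting a completion engine fill the gap.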

If brand contextual AI data is so important, why isn’t this the norm with all AI companies?

That question gets to the heart of the challenges in the AI industry. The short answer is that building a robust, contextual data layer is difficult, expensive, and runs counter to the rapid development cycle that many AI companies have adopted.

This answer surprises no one, but the longer answer explains why this isn’t a universal practice.

1. The Engineering and Technical Challenge

Developing a contextual data layer is a massive undertaking. It’s not just about adding a new feature to a model. It requires building a complete, separate system that can:

- Ingest and clean data from various sources in real time.

- Structure that data in a way that is easily searchable and understandable by an AI model.

- Handle massive scale, processing and retrieving billions of data points in milliseconds.

- Constantly update with new information to prevent the data from becoming stale.
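The ingest, structure, and update requirements above can be sketched in miniature. The example below is an in-memory illustration only: `ContextStore`, `Record`, and the sample page are hypothetical, and it makes no attempt at the scale requirement (billions of data points in milliseconds), which is where most of the real engineering effort goes.

```python
import html
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Record:
    source: str
    text: str
    fetched_at: datetime


def clean(raw: str) -> str:
    """Strip HTML tags, unescape entities, and collapse whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", html.unescape(no_tags)).strip()


class ContextStore:
    """Hypothetical store that keeps one cleaned record per source."""

    def __init__(self, max_age: timedelta):
        self.max_age = max_age
        self.records: dict[str, Record] = {}

    def ingest(self, source: str, raw: str, now: datetime) -> None:
        # Re-ingesting a source replaces its older record.
        self.records[source] = Record(source, clean(raw), now)

    def search(self, keyword: str, now: datetime) -> list[Record]:
        """Return only fresh records whose text contains the keyword."""
        return [
            r for r in self.records.values()
            if keyword.lower() in r.text.lower()
            and now - r.fetched_at <= self.max_age
        ]

    def stale_sources(self, now: datetime) -> list[str]:
        """List sources that are due for a refresh."""
        return [s for s, r in self.records.items()
                if now - r.fetched_at > self.max_age]


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
store = ContextStore(max_age=timedelta(hours=24))
store.ingest("pricing-page", "<p>Pro plan: $49 &amp; up</p>", t0)
fresh = store.search("pro plan", t0 + timedelta(hours=1))
stale = store.stale_sources(t0 + timedelta(days=2))
print(fresh[0].text, stale)
```

Filtering stale records out of search results is one simple way to keep outdated brand information from reaching the model; a real pipeline would also trigger a re-fetch for every source that `stale_sources` reports.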

This is a complex and resource-intensive engineering problem that is often more difficult than training the initial large language model itself. The solution requires creating an AI Agent, not just a collection of automated AI tools. The difference between creating an AI Agent and creating an AI tool is like the difference between building a robot that can figure out how to build a home and building a specialized hammer (a tool). Building an AI Agent that can develop an accurate brand context AI data layer is expensive and very difficult.

2. The Financial Cost

The computational and human resources needed for a contextual data layer are significant.

- Data acquisition: Creating or acquiring high-quality, domain-specific data is expensive.

- Infrastructure: The data layer requires its own powerful infrastructure, including vector databases and specialized retrieval systems, which adds to cloud computing costs.

- Talent: It demands a specialized team of data engineers and scientists, who are among the most sought-after and highly paid professionals in the tech industry.

Typically, this approach also requires building an AI Agent (not just an AI tool), which takes even more time and runs counter to a competitive AI space that prioritizes getting a product to market quickly. Investing heavily in a complex data layer can slow that process and increase costs dramatically, something investors have little patience for.

3. The “Race to Market” Mentality

The AI industry is moving at an incredible pace. Companies are under immense pressure to release new models and features before their competitors. In this environment, the strategic focus has often been on building a bigger, more powerful model rather than on creating more factually grounded, context-aware applications.

Many companies treat hallucinations as a “known issue” that they think they can address later, after they’ve established market share. This philosophy means shortcuts are taken so they can iterate quickly and then, presumably, improve the product after launch. The trade-off sacrifices factual accuracy in the short term and overall trust in AI in the long term. Great for investors, not so great for users.

The Brand Contextual Data Technology Breakthrough of Topic Intelligence™

Customers and businesses are demanding more reliable, trustworthy AI, which is forcing a new look at AI in marketing. At Topic Intelligence, we developed a Brand Contextual AI Engine designed especially for acquisition marketing, the area with the highest performance bar for marketers and one that AI is well suited to optimize. ROI-sensitive businesses like eCommerce cannot accept the inaccuracies of AI misfires because they can undermine marketing outcome requirements.

Topic Intelligence, with its proprietary brand contextual AI data engine powered by an internally developed AI Agent, is built as an integrated system that delivers great outcomes while continuing to leverage all the advantages of AI.

Core modules of the Topic Intelligence platform:

- Topic database with the contextual technology that understands how to translate web content into contextual content that humans understand.

- Topic engine that maps out the best topics to drive precise conversion mapping. This topic map gives brands a topic-centric resource allocation model to optimize results.

- Analytics module that provides a powerhouse of performance insights around the topic journey to conversion.

- A contextual brand LLM to apply to all marketing functions.

The image below describes the data and process flows (using our developed contextual AI Agent). The architecture ensures AI stays in the right brand “mindset” as it creates programs to meet a brand’s communication goals.

Figure A

The Business ROI of Topic Intelligence™ to Drive Outcomes

The advantage of Topic Intelligence is that it pairs the efficiency inherent in AI with high-performing, quality outputs for repeatable and sustainable programs. The platform finds the right topics to move audiences along their topic journeys:

- Best topics based on engagement

- Best performing topics for conversion

- The topic journeys to conversion

- Performance of topic-based marketing campaigns with attribution

- Brand AI intelligence designed to prevent hallucinations or misfires

The vital importance of a brand contextual AI data layer translates into an AI efficiency boost with increases in the accuracy and quality of AI output. This fundamentally improves AI trustworthiness and therefore its value in driving a brand’s marketing outcomes.

- Increased Accuracy in AI Predicting Useful Topics: When an AI is able to “understand” the prospect topic journey, it can consistently be more accurate and relevant in supporting customers in their discovery journey. This leads to higher performing campaigns.

- Enhanced Brand Consistency: The contextual layer ensures that every AI-generated marketing campaign is aligned with the brand’s official voice, tone, and values. This creates a cohesive and high-performing brand image.

- Improved Efficiency and Reduced Costs: By enabling context-enriched AI to handle complex, knowledge-based tasks accurately, a brand can significantly reduce the workload on its human marketing teams. This leads to higher efficiency and lower operational costs because the output can be trusted.

- Risk Mitigation: The prevention of hallucinations due to contextual guardrails protects a brand from serious reputational and financial damage. A single, false statement from an AI—such as an incorrect product claim or a misguided health recommendation—can have severe consequences. The contextual layer is a critical component of a robust risk management strategy.

Conclusion

It’s fair to think AI is as transformative now as the Internet was in its day. Then, as now, technology offered powerful tools for brands to activate to drive business. And then, as now, the new technologies often had a dark side due to trust gaps.

But unlike before, AI does not have to descend into the trust chasm that swallowed much of adtech. Instead, contextual AI serves as the essential bridge between an AI’s raw intelligence and a brand’s specific needs. Grounded in brand facts through a contextual layer, AI can truly become a powerful, reliable, and on-brand asset.