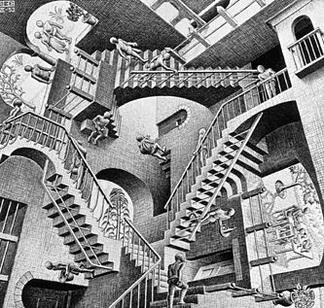

Relativity, 1953 – M.C. Escher

This famous image is an example of a mind puzzle. At first glance, everything looks as it should: staircases in a building. But look closer and you realize something is amiss. The relativity of space is all wrong, and these staircases cannot exist in the real world.

For me, this image by M.C. Escher (1898 – 1972) stands as a metaphor for a future with AI – if we are not careful.

We already see how AI can hallucinate, generating unrealistic outputs. We see how mind-numbingly lame the results can be when AI is used at an industrial scale. Twitter's adoption of AI to "summarize" trending topics gives us formulaic paragraphs ranging from the absurd to the downright misleading. They all start the same way:

“In a surprising turn of events, …”

“In a tragic turn of events…”

“In an unexpected turn…”

“In a shocking turn of events…”

“In a bold turn of events…”

“In a significant turn…”

“In a controversial turn…”

The AI summary then goes on to offer a "both sides" account that is often misguided and sometimes dangerous. Tragedy should have no "other side" to it. Bad events need to be framed as bad. But AI has no such contextual intelligence. Instead, we see neutrality in these summaries even when situations are clearly black or white. In the real world, people can't hold an even-handed opinion all the time; it is antithetical to the human spirit. It is impossible, just like our Escher image.

A reasonable person would expect AI to get better at creating the needed, nuanced context.

A reasonable expectation indeed, except for the niggling reality that AI will, sooner rather than later, run out of original content to train on. It is already running out of quality content. Business Insider explained it this way (April 7, 2024): "More is more when it comes to AI. The more data AI systems are trained on, the more powerful they will be. But as the AI arms race heats up, tech giants like Meta, Google, and OpenAI face a problem: They're running out of data to train their models…By 2026, all the high-quality data could be exhausted, according to Epoch, an AI research institute."

This is no surprise to those of us working in AI.

This means the great, mad content land grab is on.

Tech giants are taking different approaches to this AI training problem. Google is tapping content available in Google Docs, Sheets, and Slides. This could also explain why Google has been considering buying HubSpot (source: https://finance.yahoo.com/news/google-parent-alphabet-eyes-hubspot-152731371.html), which would give Google a deep trove of brand content.

Other firms are considering buying up publishers in toto to devour their content. Still others are trying to figure out how to take all the videos and images sitting on near-dead or long-dead platforms like Photobucket, Myspace, and Friendster and convert them into content that can be used to train AI. (These carcasses live on like some apocalyptic Walking Dead episode of the data world.)

Despite the land grab, these content-acquisition strategies only delay the inevitable, given the amount of data current training models need.

The long-term answer to the training problem is therefore obvious: companies will use AI to train AI.

Here is where our reality starts to turn in on itself.

AI will create realities in its math brain. Just like M.C. Escher, it will construct faux realities that cannot exist in the real world. Humans, for our part, simply don't have the processing power or the tools needed to separate the "Escher-ness" of content from what is true.

This is the real risk profile of AI.

I don't worry that AI-powered robots will take over the world. That is the stuff of fear fiction.

I worry far more about a more boring risk profile: AI will be used to train other AI models, further fraying our tenuous link to the real world.

Our ability to distinguish what is real from an AI version of reality is already under pressure. With AI training "itself," we are left with a deeply distorted view of reality, much like an Escher image: an impossible "reality" in which we are perpetually trapped with no hope of escape.

This is the true risk of AI content, and it is where our work at the Trust Web is centered. In collaboration with the National Science Foundation, we are creating a vital tech bulwark so people can retain their link to the real world even as AI creates a new reality in our name.

In the 1970s, a spot for Memorex (audio recording tape) asked: "Is it live, or is it Memorex?" In our AI-driven age, that question takes on new urgency and poignancy.